This is the first in a series of articles discussing some of the more recent energy improvement methods available to data center operators. The series is meant to provide fact-based information and to give operators a basic understanding of energy conservation measures and where they can be applied.

One interesting statistic is that roughly 75% of existing data centers with under 15,000 square feet of white space have never had any energy conservation measures implemented. In a recent presentation, I asked the audience how many of them had implemented any energy savings measures in their facilities. Only two or three people in a relatively large audience raised their hands. I then asked how many of those who had not raised their hands had corporate energy savings policies. About half of the audience raised their hands. When I asked a couple of participants why they had not implemented energy savings measures, they mainly cited fear of disruption and downtime. It really came down to risk tolerance.

Let’s face it. We can talk about Uptime Institute (UTI) tiering and the uptime percentages associated with each tier, but C-suite executives expect 100% uptime. Whether or not that is realistic matters little; the pressure to maintain uptime is pushed down to the operators, and that makes operators a very conservative bunch.

Over the last 10 or so years, a number of advances in mechanical and electrical technology have emerged that can provide significant data center energy savings. Many of these advances apply to both new and existing installations and have been proven reliable through hours of real-world operation. A number of them can be implemented in existing facilities with little or no disruption to operations while providing real energy savings. This matters whether you operate a corporate data center with an energy policy in place or a colocation facility, where saving energy can make you more competitive in the marketplace.

Over the course of this series, we will discuss energy saving methods ranging from the simplest to those that require more detailed investigation to determine whether they apply, as well as capital expenditure to carry out. So let’s begin with the simplest.

HOW HOT IS HOT?

When I began designing data centers almost 30 years ago, there was really just one way to design a data center. Sure, there were variants, but the vast majority of data centers were designed with some sort of raised floor and perimeter computer room air conditioners (CRACs). Because of computer equipment manufacturers’ requirements, data centers were kept at around 68°F and 50% relative humidity (RH). These conditions were thought to provide the computer equipment with a cool, stable operating environment. As late as 1999, the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) was still stipulating a design standard of 72°F ± 2°F and 50% RH ± 5%.

In the early 2000s, ASHRAE empaneled a technical committee whose goal was to establish new guidelines for cooling data centers. The committee included representatives from cooling system manufacturers, the HVAC engineering community, data center operators and, most importantly, computer equipment manufacturers. The equipment manufacturers were essential participants because it was generally recognized that their published operating condition requirements limited how much an operator could change the conditions in the white space. It was felt that modern chips and circuit boards could withstand a much broader range of temperature and humidity conditions. This thinking was bolstered, in part, by the broad range of conditions under which personal computers and process controllers were able to operate.

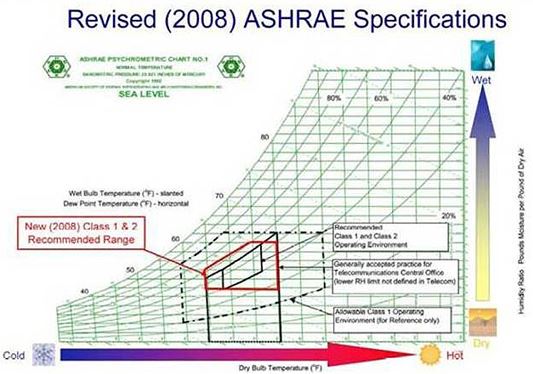

After a great deal of lab and field testing, ASHRAE Technical Committee 9.9 published its standard in 2008 (TC 9.9 – 2008), and it was subsequently incorporated into the ASHRAE Applications Manual in 2011. That standard re-established the design conditions for data center white space. Under it, the typical data center can operate from 65°F to 80°F and from 20% RH to 80% RH, so long as conditions are relatively stable. To emphasize the need for stability, the committee limited the rate of change of space conditions to 3.5°F per hour and 5% RH per hour. Because of code requirements, new data centers are now designed to these conditions.
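Because the guidance combines an operating envelope with rate-of-change limits, it lends itself to a simple check of logged space conditions. The sketch below is illustrative only: `Reading` and `check_log` are hypothetical names, the limits are the values quoted in this article (verify them against current ASHRAE guidance for your equipment class), and rates are estimated pairwise between consecutive readings. With closely spaced samples, comparing readings one hour apart may be a fairer test.

```python
from dataclasses import dataclass

# Limits as quoted in this article for TC 9.9 - 2008 (an assumption to verify
# against current ASHRAE guidance for your equipment class).
TEMP_RANGE_F = (65.0, 80.0)
RH_RANGE_PCT = (20.0, 80.0)
MAX_TEMP_RATE_F_PER_HR = 3.5
MAX_RH_RATE_PCT_PER_HR = 5.0


@dataclass
class Reading:
    """One hypothetical logged sample of white-space conditions."""
    hour: float    # hours since the start of the log
    temp_f: float  # space temperature, degrees F
    rh_pct: float  # relative humidity, percent


def check_log(readings: list[Reading]) -> list[str]:
    """Describe every envelope or rate-of-change violation in a time-ordered log.

    Rates are estimated pairwise between consecutive readings.
    """
    problems = []
    lo_t, hi_t = TEMP_RANGE_F
    lo_rh, hi_rh = RH_RANGE_PCT
    # Envelope check on each individual reading.
    for r in readings:
        if not lo_t <= r.temp_f <= hi_t:
            problems.append(f"hour {r.hour}: temperature {r.temp_f}F out of range")
        if not lo_rh <= r.rh_pct <= hi_rh:
            problems.append(f"hour {r.hour}: humidity {r.rh_pct}% out of range")
    # Rate-of-change check between consecutive readings.
    for prev, cur in zip(readings, readings[1:]):
        dt = cur.hour - prev.hour
        if dt <= 0:
            raise ValueError("readings must be in strictly increasing time order")
        temp_rate = abs(cur.temp_f - prev.temp_f) / dt
        rh_rate = abs(cur.rh_pct - prev.rh_pct) / dt
        if temp_rate > MAX_TEMP_RATE_F_PER_HR:
            problems.append(
                f"hours {prev.hour}-{cur.hour}: temperature changing {temp_rate:.1f}F/hr"
            )
        if rh_rate > MAX_RH_RATE_PCT_PER_HR:
            problems.append(
                f"hours {prev.hour}-{cur.hour}: humidity changing {rh_rate:.1f}% RH/hr"
            )
    return problems


if __name__ == "__main__":
    # Hypothetical log: the jump from 74F to 79F in one hour exceeds 3.5F/hr.
    log = [Reading(0, 72.0, 45.0), Reading(1, 74.0, 47.0), Reading(2, 79.0, 48.0)]
    for problem in check_log(log):
        print(problem)
```

Note that 79°F is inside the envelope in this example; it is only the speed of the change that gets flagged, which reflects the standard’s emphasis on stability.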

I cannot tell you how many data center operators have looked at me as if I had a third eye when I told them they ought to raise the temperature in their data center, but the results are real. In a typical data center, each degree the space temperature is raised reduces HVAC energy use by 2% to 5%. The reality is that if your operators can work comfortably in the space, so can your computer equipment. Below are two real-world examples of changing space conditions.
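To put the 2% to 5% per-degree figure in perspective, the sketch below estimates the HVAC energy reduction for a given setpoint increase. It assumes the per-degree saving compounds (each degree trims the same fraction off whatever HVAC energy remains), which the figure itself does not specify. The function name and the 1,000,000 kWh/yr HVAC load are hypothetical.

```python
def hvac_savings_fraction(degrees_raised: float, savings_per_degree: float) -> float:
    """Estimated fractional cut in HVAC energy after raising the setpoint.

    Assumes the per-degree saving compounds: each degree trims the same
    fraction off whatever HVAC energy is still being used.
    """
    if degrees_raised < 0:
        raise ValueError("degrees_raised must be non-negative")
    if not 0.0 <= savings_per_degree < 1.0:
        raise ValueError("savings_per_degree must be in [0, 1)")
    return 1.0 - (1.0 - savings_per_degree) ** degrees_raised


if __name__ == "__main__":
    degrees = 75 - 68            # e.g. moving a 68F room to a 75F setpoint
    annual_hvac_kwh = 1_000_000  # hypothetical annual HVAC consumption
    for rate in (0.02, 0.05):    # the 2% and 5% ends of the quoted range
        saved = hvac_savings_fraction(degrees, rate)
        print(f"{rate:.0%}/degree: {saved:.1%} less HVAC energy, "
              f"about {annual_hvac_kwh * saved:,.0f} kWh/yr")
```

For a 7°F increase this gives roughly 13.2% to 30.2%. Simply multiplying instead (7 × 2% and 7 × 5%) gives 14% and 35%, so either way the result is best read as a rough range rather than a prediction for a specific facility.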

Example A:

One of my clients is a large U.S.-based financial institution. They had been operating a raised floor at 68°F for as long as I had worked with them, and their deployment included some legacy equipment. Their lead facilities operator had long thought the space temperature was too low and could be raised, but had no data on which to base that assumption. Shortly after TC 9.9 – 2008 was published, he approached their CIO with the idea of raising the space temperature but was quickly shot down. Not one to be deterred, he devised a scheme to raise the data center temperature by ½°F every month until he reached 75°F. This went on for months. No one noticed or became alarmed, and there were no over-temperature alarms from the computer equipment.
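The operator’s gradual approach is easy to tabulate. The sketch below lists ½°F monthly setpoints from 68°F to 75°F; `ramp_schedule` is a hypothetical helper, and the article does not say how the operator actually tracked the changes. Such a schedule takes 14 monthly increases, with 74.5°F reached after the 13th, and each ½°F step is far gentler than the 3.5°F per hour rate-of-change limit.

```python
import math


def ramp_schedule(start_f: float, target_f: float, step_f: float) -> list[float]:
    """Setpoints from start_f up to target_f, one step per period (e.g. month).

    The final step is clipped so the schedule lands exactly on the target.
    """
    if step_f <= 0:
        raise ValueError("step_f must be positive")
    if target_f <= start_f:
        return [start_f]
    # Number of periods needed; the small tolerance absorbs floating-point error.
    periods = math.ceil((target_f - start_f) / step_f - 1e-9)
    return [start_f + i * step_f for i in range(periods)] + [target_f]


if __name__ == "__main__":
    schedule = ramp_schedule(68.0, 75.0, 0.5)
    for month, setpoint in enumerate(schedule):
        print(f"month {month:2d}: {setpoint:.1f}F")
    print(f"{len(schedule) - 1} monthly increases to reach the target")
```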

One day the CIO happened to walk through the data center and noticed that one of the CRAC displays had been left on and was indicating 74.5°F. Alarmed, he went straight to the facilities operator’s office and asked what was wrong with the HVAC system. The operator informed him there was no problem and that the system had been operating that way for months without issue. They ultimately came to an agreement and, for a number of reasons, settled on 75°F as their operating set point.

Example B:

Another of my clients is a major international Internet Service Provider (ISP). Their corporate philosophy is to be as environmentally friendly as possible. In addition, any energy cost savings contribute to their bottom line, so they are extremely aggressive about saving energy. They mostly use traditional “off-the-shelf” servers and other computer equipment, so there is nothing special about what they have deployed. Their summertime data center set point is 80°F, but it has been known to drift up to 85°F on high-load days. They rarely see temperature alarms.

As part of its standard procedures, the ISP performs failure analyses. When alarms or failures have occurred, the cause was found to be not heat related but a manufacturing defect in the device. In all cases, this has been verified by the device manufacturer.

SUMMARY

Of all the energy conservation methods, the simplest is to raise your data center temperature. As the examples above demonstrate, modern computer equipment is more than capable of handling a broad range of operating conditions. If your operators are comfortable in the space, your computer equipment will run just fine. The biggest benefit of simply raising the space temperature is that it carries no capital cost, so the payback is immediate.

The next article in the series will focus on what can be done within the white space to save energy. It will primarily look at containment and some other simple energy conservation measures that can be implemented to save money.

Written by: Raymond (Ray) F. Johnson II, PE, Senior Associate Principal